Propagandists used AI to create four images and deepfakes, presenting them as a single person

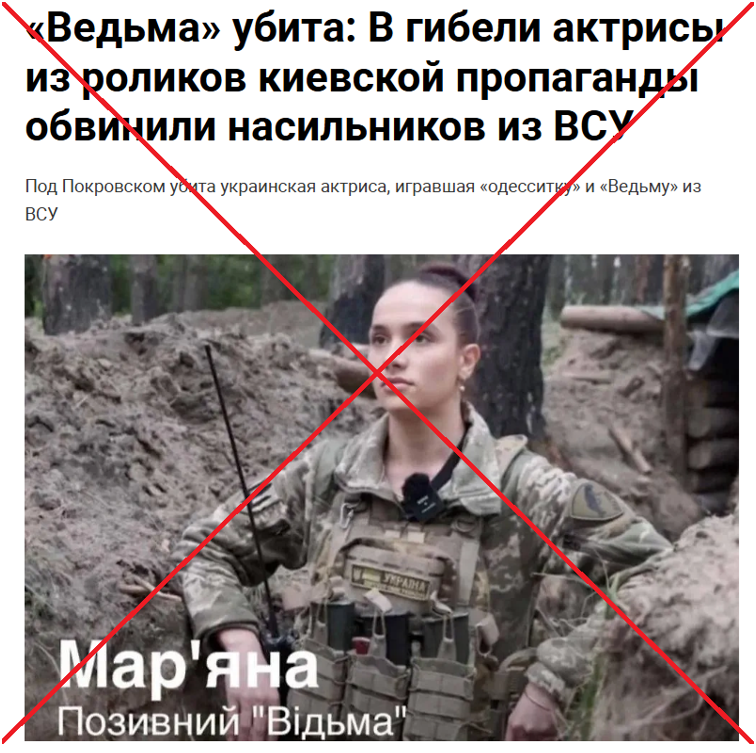

Russian state media, Telegram channels, and bots on the social media platform X have circulated images of a girl named Olena Savelieva who supposedly died at the front. Propagandists claim she previously appeared in Ukrainian TV reports under various identities: a soldier named Mariana with the call sign “Vidma” (“Witch”), a “native of Odesa” named Kseniia, and “a displaced person from Toretsk” named Olesia. As “evidence,” they present screenshots from different Ukrainian TV segments. They also claim that Ukraine fabricates stories about Russian aggression and hires actors for these videos.

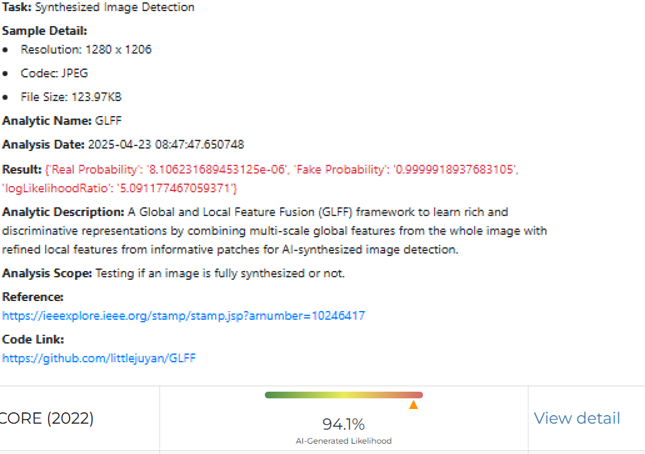

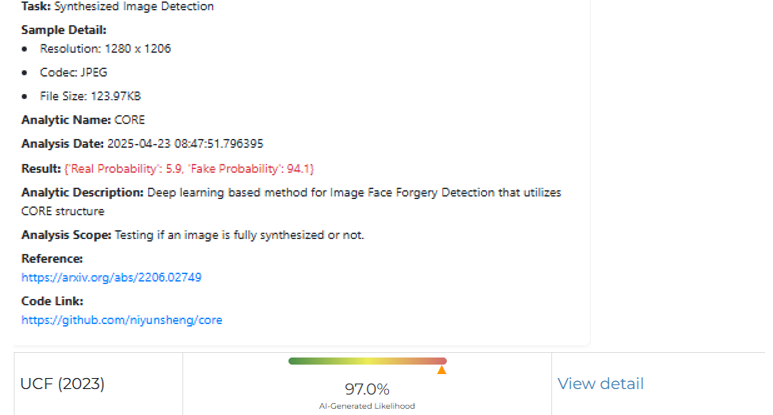

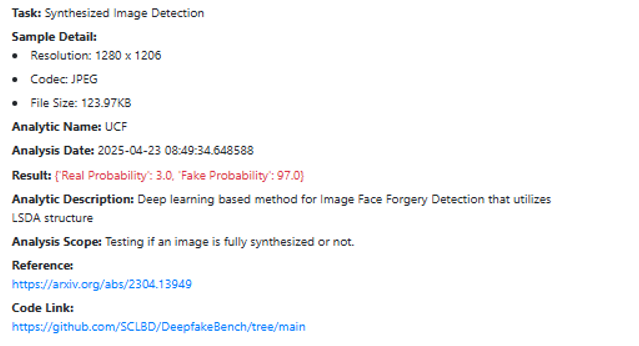

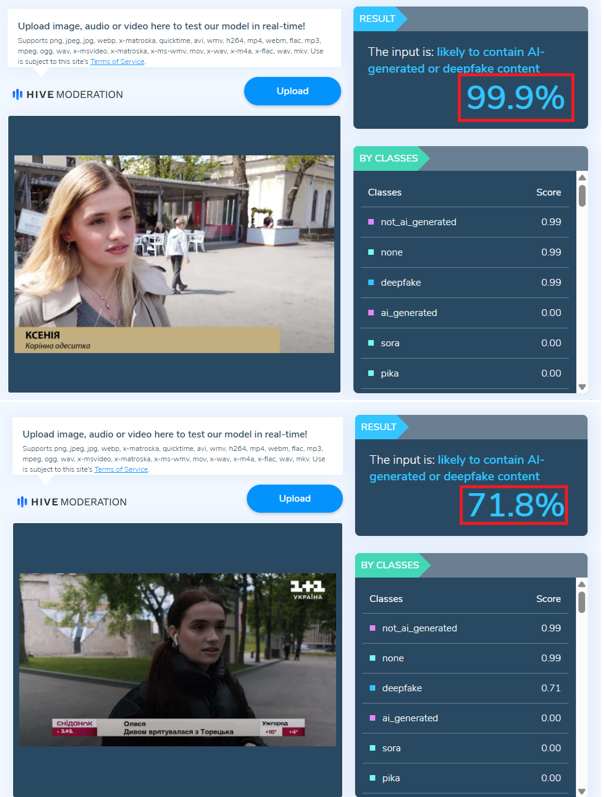

This is false. The face shown in the obituary of the so-called Olena Savelieva was generated using artificial intelligence, and the image was then edited in a graphics editor to imitate the style of the Ukrainian newspaper Kirovohradska Pravda. Ukrinform’s fact-checking team verified this using the Deepfake-o-meter application, which analyzes content for AI-generated deepfakes.

Three tools designed to detect AI-generated images, Global and Local Feature Fusion (GLFF), CORE, and UCF, rated the picture as AI-generated with probabilities of 100%, 94%, and 97%, respectively.

Notably, this “obituary” does not appear on the social media pages of Kirovohradska Pravda.

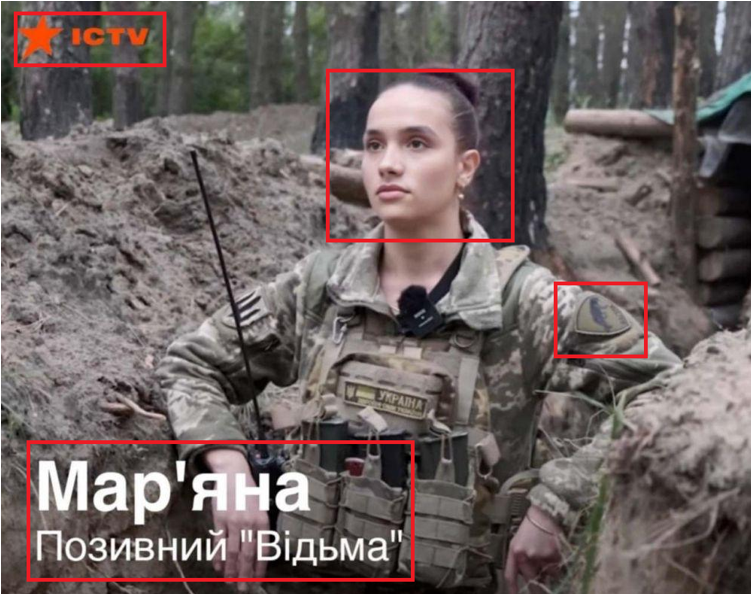

The photo of the soldier with the call sign “Vidma,” allegedly taken from an ICTV segment, depicts a different servicewoman. The original image was published on the DTF Magazine website in November 2023.

According to Hive Moderation, a tool for detecting AI-generated content, two of the other photos have a likelihood of over 99% and 71%, respectively, of being AI-generated.

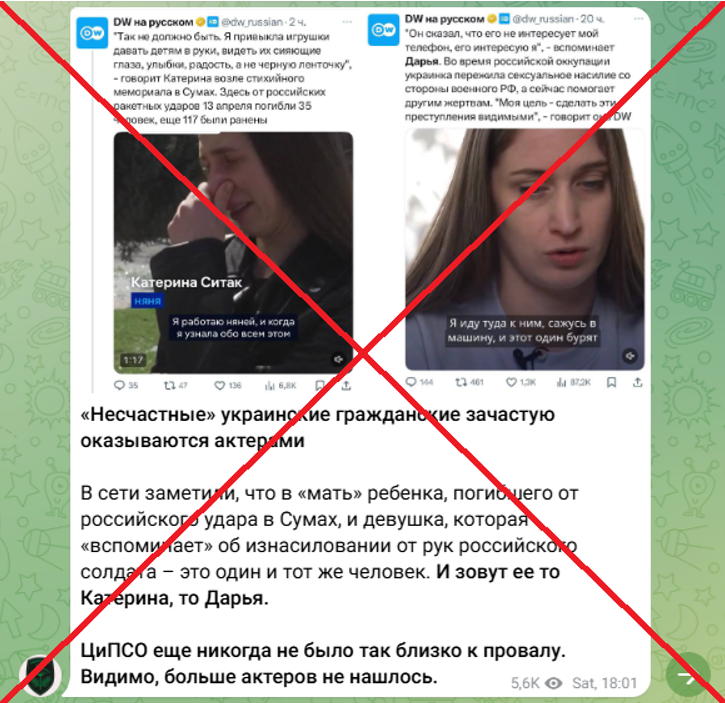

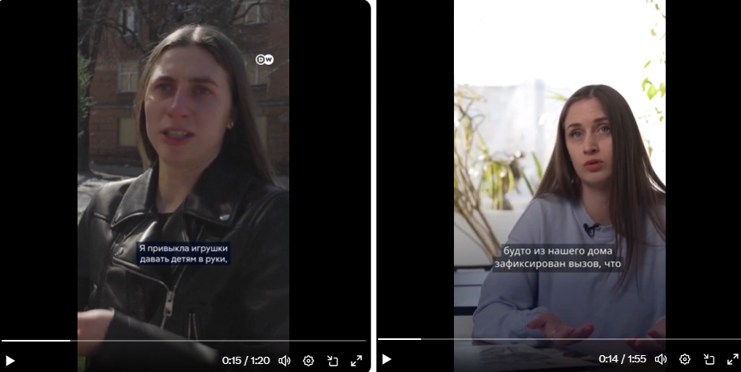

Additionally, Russian media outlets and social media bots have circulated two screenshots from news segments by the Russian service of Deutsche Welle. One report, from Sumy, features a woman named Kateryna Sivak speaking about children killed in a Russian attack on April 13, 2025. In another report, a woman named Daria shares her personal story of being raped by Russian soldiers during the occupation.

In their posts, Russians claim that the two women are the same person, an actress portraying both a grieving mother and a survivor of sexual violence, allegedly in order to discredit Russian troops.

This is false. Firstly, the two women do not resemble each other. The Russians deliberately used a profile photo of Kateryna to manipulate the narrative. Moreover, Kateryna is not the mother of the deceased children; she is a nanny who brought toys to the site of the tragedy.

Secondly, the woman from the other report is Daria Zymenko. She is an artist, designer, and activist with SEMA Ukraine, an organization supporting survivors of sexual violence.

Shortly after the full-scale Russian invasion, Zymenko moved from Kyiv to the Bucha district in the Kyiv region, where she ended up under occupation. Last year, she opened a photo exhibition in Kyiv based on her personal experience. A few months later, she spoke at a thematic conference in France.

Ukrainian investigative journalists have identified the Russian soldier allegedly responsible for her rape in 2022.

Kateryna has also been identified — she is indeed from Sumy, where she graduated from the A.S. Makarenko Sumy State Pedagogical University in January 2025.

Thus, Russian propaganda attempts to conceal Russia’s crimes against Ukrainians by cynically branding them as “fakes.” To this end, it promotes the myth that Ukraine stages fake videos and scenes to discredit Russia. Another goal of this disinformation campaign is to sow distrust in both Ukrainian and international media.

Earlier, Russian propagandists spread a fake story about the “death” of a boy supposedly “at the hands” of Ukrainian troops in Russia’s Kursk region.

Andriy Olenin

Source: Russia spreads fake news alleging Ukraine hires actresses for news reports on Russian aggression